Classification of Fractures

Fracture classification systems have existed for nearly as long as people have identified fractures; they certainly predate the advent of radiography. Even the earliest surviving written medical text, the Edwin Smith Papyrus, contains a rudimentary classification of fractures. If a fracture could be characterized as “having a wound over it, piercing through” (in other words, an open fracture), it was determined to be an “ailment not to be treated.” This early system of fracture classification served both to characterize the fracture and to guide treatment.

Since then, systems of fracture classification have served numerous purposes: to characterize fractures according to certain general and specific features, to guide treatment, and to predict outcomes. This chapter reviews the purposes and goals of fracture classification, the history of such systems, and the general types of fracture classification systems in common use today. It also provides a critical analysis of the effectiveness of fracture classification systems and of their limitations. Finally, it comments on the possible future of fracture classification systems.

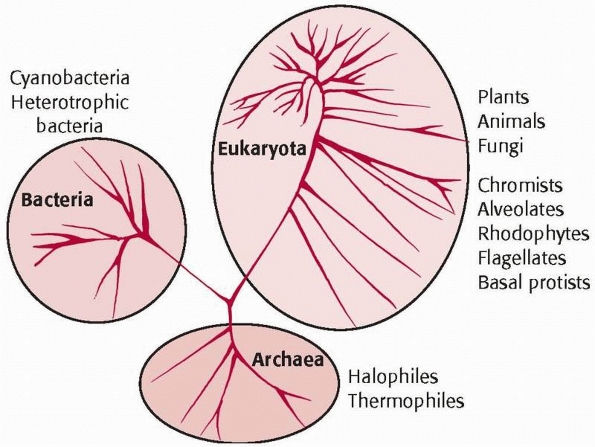

Classification is not unique to orthopaedics or to fractures. Taxonomy is a universal phenomenon that occurs in all fields of science and art. One clear and simple example is the system of taxonomy that has been used to divide

the natural world into three kingdoms: animal, vegetable, and mineral (Fig. 2-1). This taxonomy, though simple, is a good example of the kinds of classification that permeate the arts and sciences, and it illustrates the first general purpose of classification systems: to name things.

FIGURE 2-1 Balloon diagram of taxonomy of the natural world.

TABLE 2-1 Purposes of Classification Systems

A second purpose of classification systems is to describe things according to their characteristics and to provide a hierarchy of those characteristics. A group of common descriptors is created so that individual items can be classified into various groups. The groups are then ordered into a hierarchy according to some definition of complexity. A simple example is the phylogeny used to describe the animal kingdom: this system describes and groups animals according to common characteristics and then orders those groups in a hierarchy of the complexity of the organism. This is, in principle, analogous to many fracture classification systems, which provide a group of common descriptors for fractures that are ordered according to complexity.

A third purpose of classification systems is to guide an action or intervention. This feature is not universally seen; it is generally present only in classification systems that are diagnostic in nature. This introduces one of the key distinctions among classification systems: the distinction between systems used for description and characterization and those used to guide actions and predict outcomes. For example, the classification system for the animal kingdom names and classifies animals, but it is descriptive only; it does not guide the observer toward any action. In orthopaedic practice, however, physicians use fracture classification systems to assist in making treatment decisions. In fact, many fracture classification systems were designed specifically to guide treatment. We should have higher expectations of the validity and integrity of systems that are used to guide actions than of those used purely as descriptive tools.

A fourth purpose of classification systems is to assist in predicting the outcome of an intervention or treatment. The ability to reliably predict an outcome from a fracture classification alone would be of tremendous benefit, because it would allow physicians to counsel patients about the expected outcome beginning at the time of injury. This ability would also greatly assist clinical research, because it would allow the results of one clinical study of a particular fracture to be compared with those of another. For a classification system to reliably predict outcome, a rigorous analysis of its reliability and validity is necessary. Table 2-1 summarizes the purposes of classification systems, along with the level of reliability and validity necessary for high performance of the system.

Fracture classification systems were in use long before physicians had radiographs. The Edwin Smith Papyrus, although it did not make a clear distinction between comminuted and noncomminuted fractures, clearly classified fractures as open or closed and provided guidelines for treatment based on that classification. Open fractures, for example, were synonymous with early death in ancient Egypt, and these fractures were “ailments not to be treated.”

Before the discovery of radiographs, fracture classification systems were based on the clinical appearance of the limb alone. The Colles fracture of the distal radius, in which the distal fragment was displaced dorsally, causing the dinner-fork deformity of the distal radius, was a common fracture. Any fracture with this clinical deformity was considered a Colles fracture and was treated with correction of the deformity and immobilization of the limb.15 The Pott fracture, a fracture of the distal tibia and fibula with varus deformity, was likewise a fracture classification based only on the clinical appearance of the limb.58 These are but two examples of fracture classifications that were accepted and in widespread use before the development of radiographic imaging.

With the advent of radiography, fracture classification systems expanded in number and came into common usage. Radiography so altered the understanding of fractures and the methods of fracture care that nearly all fracture classification systems in use today are based solely on the appearance of the fracture fragments on plain radiographs: most describe the location, number, and displacement of the fracture lines rather than the clinical appearance of the fractured limb. Although countless radiographic classification systems have been described in the past century for fractures in all parts of the skeleton, only the most enduring remain in common usage today. Examples are the Garden30 and Neer51 classification systems of proximal femoral and proximal humeral fractures, respectively. These and other commonly used classification systems are discussed in more detail later in this chapter.

Most fracture classification systems are based upon having observers, usually orthopaedic physicians, make judgments and interpretations based on the analysis of plain radiographs of the fractured bone. Usually, anteroposterior and lateral radiographs are used, although some fracture classification systems allow for or encourage the use of additional views, such as oblique radiographs or internal and external rotation radiographs. Each decision made in the process of classifying a fracture is therefore based on a human's interpretation of the often complex patterns of shadows on a plain radiograph of the fractured limb. This, in turn, requires that the observer have a detailed and fundamental understanding of the osteology of the bone being imaged and of the fracture being classified. The observer must be able to accurately and completely identify all of the fracture lines, understand the origin and nature of all of the fracture fragments, and delineate the relationship of the fragments to one another. Finally, fracture classification requires that the observer accurately quantify the displacement or angulation of each fracture fragment from the position it would occupy in the nonfractured bone.

Computed tomography (CT) scans have been added by many observers to assist in classifying fractures. In most cases, the CT data have been applied to a classification system that was devised for use with plain radiographs alone. A few classification systems, however, are specifically designed for use with CT data. The best-known example is the Sanders classification system for fractures of the calcaneus,61 which was designed for use with a carefully defined semicoronal CT sequence through the posterior facet of the subtalar joint.

FIGURE 2-2 Tibial fracture as seen on radiograph (A) and intraoperatively (B). The x-ray appearance greatly underestimates the overall severity of the injury.

Traditionally, fracture classification systems have relied solely on radiographic images to classify the fracture, guide treatment, and predict outcomes. It is increasingly appreciated, however, that nonradiographic factors, such as the extent of the soft tissue injury, the presence of other injuries (skeletal or nonskeletal), and medical comorbidities, have a large effect on treatment decisions and on the outcomes of fracture treatment.23,42 These factors are not accounted for in radiographic systems of fracture classification.

A plain radiograph does not allow the observer to fully appreciate the extent of the soft tissue damage, and it provides no information about the patient's medical history. For example, on viewing the radiograph of the transverse tibial shaft fracture shown in Figure 2-2, one may conclude that this is a simple, low-energy injury. In this case, however, the fracture resulted from very high energy, and the patient sustained extensive soft tissue damage. In addition, the patient was an insulin-dependent diabetic with severe peripheral neuropathy and skin ulcerations on the fractured limb. Neither the plain radiographs nor a classification based on radiographs alone can account for these additional factors. The patient in this example required amputation, a treatment that would not have been predicted from the radiographs alone. The role of classifying the soft tissue injury in characterizing fractures is discussed later in this chapter.

Fracture classification systems in common use can be divided into three broad categories: (i) fracture-specific systems, which were developed for the classification of a single fracture in a single location in the skeleton; (ii) generic or universal systems, which apply a single, consistent methodology to the classification of fractures in all parts of the human skeleton; and (iii) systems that attempt to classify the soft tissue injury. It is beyond the scope of this chapter to discuss individually all the fracture classification systems in common use, but it is important for the reader to understand the differences between these general types. For that reason, some examples of each type are discussed.

The Garden classification of femoral neck fractures is a longstanding system that describes the displacement and angulation of the femoral head on anteroposterior (AP) and lateral radiographs of the hip (see Fig. 47-2). The classification is essentially descriptive, describing the location and displacement of the fractured femoral neck and head. The fracture types are ordered, however, to indicate increasing fracture severity, greater fracture instability, and a higher risk of complications with attempts at reduction and stabilization. This ordering of fracture types by severity makes the system ordinal rather than merely nominal. Garden type 1 and 2 fractures are considered stable injuries and are frequently treated with percutaneous internal fixation. Garden type 3 and 4 fractures are grouped as unstable patterns; although closed reduction and internal fixation are used in some circumstances, most Garden 3 and 4 fractures in elderly patients are treated with arthroplasty.
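The difference between a nominal and an ordinal system can be made concrete in code. The following minimal Python sketch encodes the four Garden types as an ordered integer enumeration and collapses them into the stable (types 1 and 2) and unstable (types 3 and 4) groups described above. The names `GardenType` and `stability_group` are hypothetical, and the sketch records only the grouping, not a treatment recommendation.

```python
from enum import IntEnum


class GardenType(IntEnum):
    """Garden types as an ordinal scale: higher values indicate greater
    severity and instability, following the ordering described in the text."""
    TYPE_1 = 1
    TYPE_2 = 2
    TYPE_3 = 3
    TYPE_4 = 4


def stability_group(garden_type: GardenType) -> str:
    """Collapse the four ordered types into the two groups used clinically."""
    return "stable" if garden_type <= GardenType.TYPE_2 else "unstable"


# Comparisons are meaningful only because the scale is ordinal;
# in a purely nominal system, "greater than" would have no meaning.
print(GardenType.TYPE_3 > GardenType.TYPE_2)  # True
print(stability_group(GardenType.TYPE_1))     # stable
print(stability_group(GardenType.TYPE_4))     # unstable
```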

The Schatzker classification of tibial plateau fractures is another widely used descriptive system. It is based on the location of the major fracture line in the proximal tibia and the presence or absence of a depressed segment of the articular surface of the proximal tibia (see Fig. 53-9). The classification does not depend on the amount of displacement or depression of the articular fragments, only on the location of the fracture lines. The Schatzker classification seems very simple, but it also demonstrates some of the confusion that fracture classifications can create. For example, Schatzker V and VI fractures are distinct types in the system, but observers have great difficulty distinguishing them from one another on radiographs. Also, the Schatzker VI group includes fractures classified as types C1 and C3 by the AO/OTA system (described below), an inconsistency between two commonly used systems for classifying the same fracture that can lead to confusion among observers.

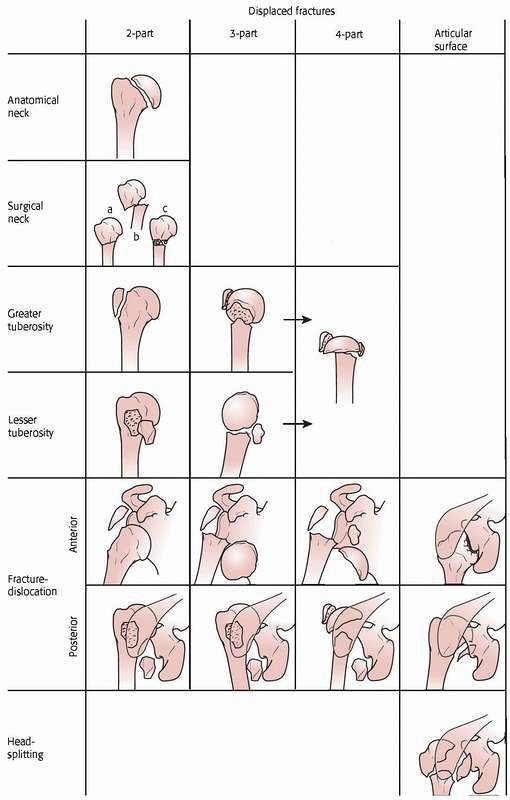

The Neer classification of proximal humeral fractures (Fig. 2-3) is based on how many fracture “parts” there are; a part is defined as a fracture fragment that is either displaced more than 1 cm or angulated more than 45 degrees. The Neer classification groups fractures into nondisplaced (one-part), two-part, three-part, or four-part fractures. Nondisplaced fractures in the Neer system may involve several fracture lines, none of which meets the displacement or angulation criteria for a “part.” Two-part fractures can represent either a fracture across the surgical neck of the humerus or a displaced fracture of the greater tuberosity. Three-part fractures classically involve displacement or angulation of the humeral head and greater tuberosity fragments. Four-part fractures involve displacement or angulation of the humeral head and of both the greater and lesser tuberosities. Note that, in addition to correct identification of the fracture fragments, this classification requires the observer to make careful and accurate measurements of fragment displacement and angulation to determine whether a fragment constitutes a part.
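The counting rule behind the Neer system can be sketched in a few lines. This is a simplified Python illustration, not a clinical tool: it assumes each fragment's displacement and angulation have already been measured relative to the humeral shaft, counts the shaft as one part and each fragment meeting either criterion as an additional part, and ignores fracture-dislocations and head-splitting patterns. The names `Fragment`, `is_part`, and `neer_parts` are hypothetical. The text says "more than" 1 cm, whereas the caption of Figure 2-3 says "1 cm or greater"; the strict comparison below follows the text.

```python
from dataclasses import dataclass

# Criteria as stated in the text: displaced more than 1 cm or
# angulated more than 45 degrees.
DISPLACEMENT_THRESHOLD_CM = 1.0
ANGULATION_THRESHOLD_DEG = 45.0


@dataclass
class Fragment:
    """Hypothetical record of one measured fragment, relative to the shaft."""
    name: str
    displacement_cm: float
    angulation_deg: float


def is_part(fragment: Fragment) -> bool:
    """A fragment counts as a 'part' if it meets either criterion."""
    return (fragment.displacement_cm > DISPLACEMENT_THRESHOLD_CM
            or fragment.angulation_deg > ANGULATION_THRESHOLD_DEG)


def neer_parts(fragments: list[Fragment]) -> int:
    """Shaft counts as one part; each displaced fragment adds one (max 4)."""
    displaced = sum(1 for fragment in fragments if is_part(fragment))
    return min(1 + displaced, 4)


measured = [
    Fragment("humeral head", displacement_cm=1.5, angulation_deg=20.0),
    Fragment("greater tuberosity", displacement_cm=1.2, angulation_deg=0.0),
    Fragment("lesser tuberosity", displacement_cm=0.3, angulation_deg=0.0),
]
print(neer_parts(measured))  # 3 (head and greater tuberosity are displaced)
```

The sketch also shows why measurement error matters: moving a single fragment across the 1 cm or 45-degree threshold changes the category.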

The Lauge-Hansen classification of ankle fractures is an example of a widely used system that is based primarily on the mechanism of injury. The system makes use of the fact that particular mechanisms of injury to the ankle produce predictable patterns of fracture of the malleoli; the appearance of the fracture on the radiographs is then used to infer the mechanism of injury. Injuries are classified according to the position of the foot at the time of injury and the direction of the deforming force. The position of the foot is described as pronation or supination, and the deforming force is categorized as external rotation, inversion, or eversion. This creates six general fracture types, which are essentially nominal: they are not ordered by increasing injury severity. Within each type, however, there is an ordinal scale, with stages of severity (1 to 4) assigned according to the fracture pattern. With this system, correct determination of the fracture type can guide the manipulations needed to effect fracture reduction, because the treating physician must reverse the direction of the injuring forces to achieve a reduction. For example, internal rotation is required to reduce a supination external rotation fracture pattern.
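The structure just described, with two foot positions crossed with three forces to give six nominal types and an ordinal stage within each, can be sketched as follows. This Python example is illustrative only; the enumeration names and the one-to-one "reversing maneuver" mapping are hypothetical simplifications of the principle that reduction reverses the injuring force.

```python
from enum import Enum
from itertools import product


class FootPosition(Enum):
    PRONATION = "pronation"
    SUPINATION = "supination"


class DeformingForce(Enum):
    EXTERNAL_ROTATION = "external rotation"
    INVERSION = "inversion"
    EVERSION = "eversion"


# Hypothetical simplification: one opposing maneuver per injuring force.
REVERSING_MANEUVER = {
    DeformingForce.EXTERNAL_ROTATION: "internal rotation",
    DeformingForce.INVERSION: "eversion",
    DeformingForce.EVERSION: "inversion",
}


def fracture_types() -> list[str]:
    """The six nominal position/force combinations (no severity order)."""
    return [f"{pos.value}-{force.value}"
            for pos, force in product(FootPosition, DeformingForce)]


def classify(position: FootPosition, force: DeformingForce, stage: int) -> str:
    """Combine the nominal type with its ordinal stage (1 to 4)."""
    if not 1 <= stage <= 4:
        raise ValueError("stage must be between 1 and 4")
    return f"{position.value}-{force.value}, stage {stage}"


def reduction_maneuver(force: DeformingForce) -> str:
    """Reduction reverses the direction of the injuring force."""
    return REVERSING_MANEUVER[force]


print(len(fracture_types()))  # 6
print(classify(FootPosition.SUPINATION, DeformingForce.EXTERNAL_ROTATION, 2))
# supination-external rotation, stage 2
print(reduction_maneuver(DeformingForce.EXTERNAL_ROTATION))  # internal rotation
```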

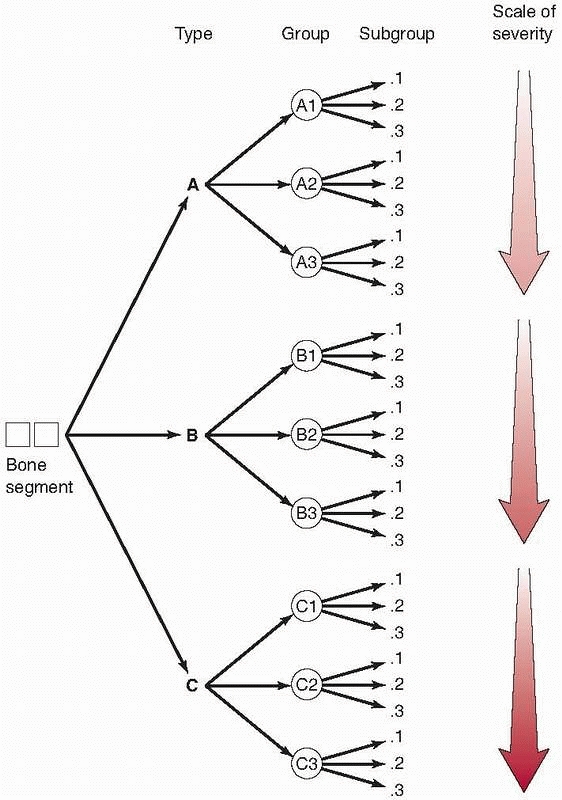

The AO/OTA fracture classification system is essentially the only generic or universal system in wide usage today. It is universal in the sense that the same classification system can be applied to fractures throughout the skeleton. It was devised through a consensus panel of orthopaedic traumatologists who were members of the Orthopaedic Trauma Association and is based upon a classification system initially developed and proposed by the AO/ASIF group in Europe.49,50 The Orthopaedic Trauma Association believed there was a need for a detailed universal system of fracture classification to allow standardization of research and communication among orthopaedic surgeons. The AO/OTA fracture classification system is an alphanumeric system that can be applied to most bones within the body. A fracture is classified by answering a series of questions:

- Which bone? The major bones in the body are numbered, with the humerus being #1, the forearm #2, the femur #3, the tibia #4, and so on (Fig. 2-4).
- Where in the bone is the fracture? The answer to this question identifies a specific segment within the bone, and the second number of the code is applied to this location. In most long bones, the diaphyseal segment (2) is located between the proximal (1) and distal (3) segments. The dividing lines between the shaft segment and the proximal and distal segments occur in the metaphysis of the bone. The tibia is assigned a fourth segment, the malleolar segment. For example, answering the first two questions for a fracture of the midshaft of the femur gives the numeric classification 32 (3 for femur, 2 for the diaphyseal segment) (Fig. 2-4).
- Which fracture type? The fracture type can be A, B, or C, but these three types are defined differently for diaphyseal fractures and for fractures at either end of the bone. For diaphyseal fractures, type A is a simple fracture with two fragments; type B has some comminution, but there can still be contact between the proximal and distal fragments; and type C is a highly comminuted or segmental fracture with no contact possible between the proximal and distal fragments. For proximal and distal segment fractures, type A fractures are extra-articular; type B fractures are partial articular (there is some continuity between the shaft and some portion of the articular surface); and type C fractures involve complete disruption of the articular surface from the diaphysis. An example of this portion of the classification system is shown in Figure 2-4.
- Which group does the fracture belong to? Grouping further divides fractures according to more specific descriptive details. Groups are not consistently defined; that is, they differ for each fracture type. A complete description of the fracture groups is beyond the scope of this chapter.
- Which subgroup? This is the most detailed determination in the AO/OTA classification system. As with groups, subgroups differ from bone to bone and depend upon the key features of the given bone. The intended purpose of the subgroups is to increase the precision of the classification system. An in-depth discussion of this level is beyond the scope of this chapter, and the reader is referred to the references for a more detailed description of this universal fracture classification system.
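The sequence of questions above maps naturally onto a small data structure. The following Python sketch is hypothetical: it covers only the bones and segments named in the text, assumes groups and subgroups are numbered 1 to 3 (consistent with the three patterns and nine subgroups per type mentioned in the caption of Figure 2-4), and assumes the hyphenated notation "32-A1.2" (bone and segment, hyphen, type and group, dot, subgroup) used in printed versions of the AO/OTA scheme. Check the current AO/OTA compendium before relying on any particular notation.

```python
from dataclasses import dataclass
from typing import Optional

# Only the bones and segments named in the text are included here.
BONES = {1: "humerus", 2: "forearm", 3: "femur", 4: "tibia"}
SEGMENTS = {1: "proximal", 2: "diaphyseal", 3: "distal", 4: "malleolar"}

# Type meanings differ between the diaphysis and the end segments.
TYPE_MEANING = {
    "diaphyseal": {
        "A": "simple, two fragments",
        "B": "some comminution, proximal-distal contact possible",
        "C": "highly comminuted or segmental, no contact possible",
    },
    "end segment": {
        "A": "extra-articular",
        "B": "partial articular",
        "C": "complete articular",
    },
}


@dataclass
class AOOTACode:
    bone: int
    segment: int
    fracture_type: Optional[str] = None  # "A", "B", or "C"
    group: Optional[int] = None          # assumed 1-3
    subgroup: Optional[int] = None       # assumed 1-3

    def __post_init__(self) -> None:
        if self.bone not in BONES or self.segment not in SEGMENTS:
            raise ValueError("bone or segment not covered by this sketch")
        if self.segment == 4 and self.bone != 4:
            raise ValueError("only the tibia has a malleolar (4) segment")
        if self.fracture_type is not None and self.fracture_type not in ("A", "B", "C"):
            raise ValueError("fracture type must be A, B, or C")
        if self.group is not None and (self.fracture_type is None or self.group not in (1, 2, 3)):
            raise ValueError("group requires a type and must be 1-3")
        if self.subgroup is not None and (self.group is None or self.subgroup not in (1, 2, 3)):
            raise ValueError("subgroup requires a group and must be 1-3")

    def __str__(self) -> str:
        # Assumed notation: bone+segment, hyphen, type+group, dot, subgroup.
        code = f"{self.bone}{self.segment}"
        if self.fracture_type is not None:
            code += f"-{self.fracture_type}"
            if self.group is not None:
                code += str(self.group)
                if self.subgroup is not None:
                    code += f".{self.subgroup}"
        return code

    def type_meaning(self) -> Optional[str]:
        """Plain-language meaning of the type; malleolar types not covered."""
        if self.fracture_type is None or self.segment == 4:
            return None
        key = "diaphyseal" if self.segment == 2 else "end segment"
        return TYPE_MEANING[key][self.fracture_type]


print(AOOTACode(bone=3, segment=2))         # 32 (femoral shaft)
print(AOOTACode(3, 2, "A", 1, 2))           # 32-A1.2
print(AOOTACode(3, 3, "C").type_meaning())  # complete articular
```

Each additional field narrows the description, which illustrates the design goal discussed next: more detail per code, at the cost of more decisions for the observer to make.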

FIGURE 2-3 The Neer four-part classification of proximal humerus fractures. A fracture is displaced if the fracture fragments are separated 1 cm or greater, or if angulation between the fracture fragments is more than 45 degrees. A displaced fracture is either a two-, three-, or four-part fracture. (From Neer CS. Displaced proximal humeral fractures: I. classification and evaluation. J Bone Joint Surg 1970;52A:1077-1089, reprinted with permission from Journal of Bone and Joint Surgery.)

FIGURE 2-4 The AO/ASIF Comprehensive Long Bone Classification applied to proximal humeral fractures. This system describes three types of proximal humerus fractures (types A, B, and C). Type A fractures are described as unifocal extra-articular (two-segment) fractures, type B as bifocal extra-articular (three-segment) fractures, and type C as anatomic neck or articular segment fractures. Each type includes three fracture patterns, with nine subgroups for each type of fracture. The subgroup classification indicates the degree of displacement. (Adapted from Müller ME, Allgower M, Schneider R, et al. Manual of Internal Fixation. New York: Springer-Verlag, 1991, with permission.)

The AO/OTA classification is continually evaluated by a committee of the OTA and is open to change where appropriate. The reader should note that the AO/OTA classification and its precursor, the AO/ASIF system, were designed to delineate and record the maximum possible amount of detail about the individual fracture pattern and its appearance on radiographs. The assumption made during their development was that specific definitions and diagrams and a high degree of detail would bring greater accuracy and a superior classification system that could be applied by any orthopaedic surgeon. It was believed that such a system could potentially result in better prognostic and research capabilities. As discussed later in this chapter, however, greater specificity and detail in a fracture classification system do not necessarily correlate with good performance of the system.

Classification of the soft tissue injury is important because the energy of the injury may be reflected in the soft tissue damage to the involved extremity. A comminuted fracture on a radiograph is often assumed to be a high-energy injury. However, other patient factors may lead to a comminuted fracture from a lower-energy mechanism. This may be seen in an elderly patient who sustains a significantly comminuted distal humerus fracture in a ground-level fall: the energy of the injury was low, but the underlying osteoporotic bone produced a complex fracture pattern. Part of the value of soft tissue classification systems lies in planning treatment, and part lies in predicting outcome.

The most obvious example of a soft tissue injury associated with a fracture is the open fracture. Early classification systems for open fractures focused only on the size of the opening in the skin. With time, however, it was recognized that the extent of muscle injury, local vascular damage, and periosteal stripping are also of paramount significance. Gustilo et al.32,33 developed the classification system now used by most North American orthopaedists to describe open fractures. This system takes into account the skin wound, the extent of local soft tissue injury and contamination, and the severity of the fracture pattern (see Table 12-2). The Gustilo classification originally included type I, type II, and type III fractures; it was later modified to divide type III open fractures into subtypes A, B, and C. Importantly, a type III-C fracture is defined as any open fracture with an accompanying vascular injury that requires repair.

The Gustilo classification system has been applied to open fractures in nearly all long bones. It is important to recognize that it can be applied fully only after surgical debridement of the open fracture has been performed. The system has proven useful in predicting the risk of infection in open tibial fractures.32

TABLE 2-2 Oestern and Tscherne Classification of Closed Fractures

The interobserver reliability of classifying open fractures according to the classification of Gustilo was investigated by Brumback and Jones,10 who presented radiographs and videotapes of surgical debridements to a group of orthopaedic traumatologists, who then classified the fractures. They reported an average interobserver agreement of 60%. The range of agreement, however, was wide, from 42% to 94%. Agreement was best for the most severe and the least severe injuries and poorer for fractures in the middle range of the classification. This uneven reliability across the spectrum of injury severity has been a criticism of the system as a prognostic indicator for any but the least severe and most severe injuries.
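The text does not describe exactly how Brumback and Jones computed their average and range, so the following Python sketch shows only one plausible way to summarize multi-observer agreement: for each case, take the proportion of observer pairs that chose the same category, then report the mean, minimum, and maximum across cases. The function names and the ratings are hypothetical.

```python
from itertools import combinations


def case_agreement(ratings: list[str]) -> float:
    """Proportion of observer pairs that gave this case the same category."""
    pairs = list(combinations(ratings, 2))
    if not pairs:
        raise ValueError("at least two observers are required")
    return sum(a == b for a, b in pairs) / len(pairs)


def summarize_agreement(cases: list[list[str]]) -> tuple[float, float, float]:
    """Mean, minimum, and maximum of the per-case pairwise agreement."""
    per_case = [case_agreement(ratings) for ratings in cases]
    return sum(per_case) / len(per_case), min(per_case), max(per_case)


# Hypothetical ratings: four observers, three open fractures.
cases = [
    ["I", "I", "I", "I"],              # all 6 pairs agree
    ["II", "IIIA", "II", "IIIA"],      # 2 of 6 pairs agree
    ["IIIC", "IIIC", "IIIC", "IIIB"],  # 3 of 6 pairs agree
]
mean, low, high = summarize_agreement(cases)
print(f"mean {mean:.0%}, range {low:.0%} to {high:.0%}")
# mean 61%, range 33% to 100%
```

A single average can hide exactly the pattern the study reported: high agreement at the extremes of severity and poor agreement in the middle.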

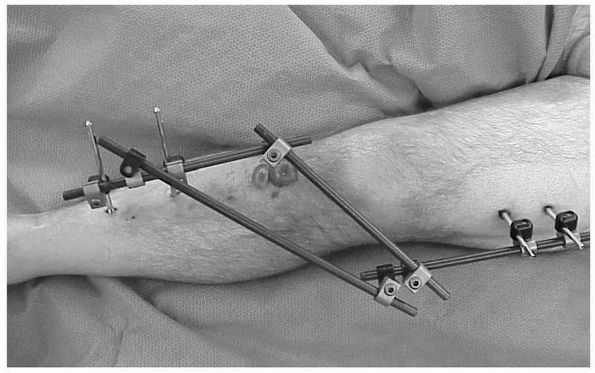

The Tscherne classification (Table 2-2) remains the only published classification system for the soft tissue injury associated with closed fractures. Fractures are assigned one of four grades, from 0 to 3. Figure 2-5 shows a patient with a Tscherne grade 2 closed tibial plateau fracture. Deep abrasions of the skin, muscle contusion, fracture blisters, and massive soft tissue swelling, as in this patient, may lead the surgeon away from immediate articular stabilization and toward temporary spanning external fixation. No studies have been done to determine the interobserver reliability of the Tscherne system for classifying the soft tissue injury associated with closed fractures.

A classification system is of greatest value if it can assist in predicting outcome. A prospective study by Gaston et al.31 assessed various fracture classification schemes against several validated functional outcome measures in patients with tibial shaft fractures. The Tscherne classification of closed fractures was more predictive of outcome than the other classification systems used, and it was most strongly predictive of time to return to prolonged walking or running.

FIGURE 2-5 Example of a Tscherne II fracture of the proximal tibia.

Reliability reflects the ability of a classification system to return

the same result for the same fracture radiographs over multiple

observers or by the same observer when viewing the fracture on multiple

occasions. The former is termed interobserver reliability, or the

agreement between different observers using the classification system

to assess the same cases. The latter is termed intraobserver

reproducibility—the agreement of the same observer’s assessment, using

the classification system, for the same cases on repeated occasions.

The validity of a classification system reflects the accuracy with

which the system describes the true fracture entity. A valid

classification system would correctly categorize the fracture in a

large percentage of cases, when compared to a “gold standard.”

Unfortunately, there is no such gold standard for fracture

classification—not even observation at surgery can be considered

infallible—so the assessment of the performance of fracture

classification systems must be confined to assessing interobserver

reliability and intraobserver reproducibility.

There has been some confusion about the terms “agreement” and “accuracy” as applied to the performance of fracture classification systems, and about which of these is the better measure of a system's performance. The term “accuracy” implies that there is a correct answer, or gold standard, against which classifications can be compared, validated, and judged true or false. The term “agreement,” however, implies that there is no defined gold standard and that unanimous agreement among all observers classifying a given fracture is the highest measure of a classification system's performance. The two terms are not synonymous and are not interchangeable. Each is tested by a different statistical method, and optimizing each would require a radically different method of generating and validating a fracture classification system.

It has at times been unclear whether those developing and applying classification systems today expect the classification to serve as a gold standard or are attempting to develop it to achieve optimal agreement among observers.

Numerous studies have appeared in the orthopaedic literature assessing the interobserver reliability of various fracture classification systems.2,3,28,39,53,59,67,73 In a controversial editorial published in 1993, “Fracture Classification Systems: Do They Work and Are They Useful?”, Albert Burstein, Ph.D., laid out some important issues and considerations for fracture classification systems.11

He stated that classification systems are tools, and that the measure of whether such a tool works is whether it produces the same result, time after time, in the hands of anyone who employs it. Dr. Burstein

went on to say that “any classification scheme, be it nominal, ordinal,

or scalar, should be proved to be a workable tool before it is used in

a discriminatory or predictive manner.” He emphasized that the key

distinction for a classification system was between its use to describe

and characterize fractures and its use to guide treatment or predict

outcomes. It is the latter use that requires a system to be proven to

be a valid tool; the minimum criteria for acceptable performance of any

classification system should be a demonstration of a high degree of

interobserver reliability and intraobserver reproducibility.

Many studies were published after this editorial appeared, and nearly all concluded that fracture classification systems had substantial interobserver variability. Classification systems for fractures of the proximal femur,2,28,53,56,64,73 proximal humerus,7,9,39,67,68 ankle,16,52,59,64,73 distal tibia,22,45,71 and tibial plateau,12,46,75,76,77 among others, were all shown to have poor to slight interobserver reliability. The earliest of these studies looked only at the observed percentage of agreement, that is, the percentage of times that individual pairs of observers placed fractures in the same category. Subsequent studies, however, have most frequently used the kappa statistic, which analyzes pairwise comparisons between observers applying the same classification system to a specific set of fracture cases. The kappa statistic was introduced by Cohen in 1960,13 and it and its variants are the most recognized and widely used methods for measuring the reliability of fracture classification systems. The kappa statistic adjusts the proportion of agreement between any two observers by correcting for the proportion of agreement that could have occurred by chance alone. Kappa values can range from +1.0 (perfect agreement) through 0.0 (chance agreement) to −1.0 (perfect disagreement) (Table 2-3).
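The chance correction can be written as kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance, computed from how often each observer uses each category. The following minimal Python sketch computes both the raw percentage of agreement used in the earliest studies and unweighted Cohen's kappa for two observers. The function names and the ten Neer classifications are hypothetical illustrations.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b) -> float:
    """Observed proportion of cases placed in the same category by both."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohen_kappa(rater_a, rater_b) -> float:
    """Unweighted Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(rater_a)
    p_o = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: both observers independently pick the same category.
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when both use a single category")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical Neer classifications (number of parts) of ten fractures.
observer_a = [1, 2, 2, 3, 4, 1, 2, 3, 3, 4]
observer_b = [1, 2, 3, 3, 4, 1, 2, 2, 3, 3]
print(percent_agreement(observer_a, observer_b))       # 0.7
print(round(cohen_kappa(observer_a, observer_b), 3))   # 0.589
```

Here the observers agree on 70% of cases, but because 27% agreement would be expected by chance from their category frequencies, kappa is noticeably lower than the raw percentage.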

The unweighted kappa statistic is appropriate when there are only two fracture categories or when the classification system is nominal, so that all categorical differences are equally important. In most situations, however, there are more than two categories into which a fracture can be classified, and fracture classification systems are ordinal: the categories are ranked according to injury severity, treatment method, or presumed outcome. In these cases, the most appropriate variant is the weighted kappa statistic, described by Fleiss,26,27 in which some credit is given for partial agreement and not all disagreements are treated equally. For example, in the Neer classification of proximal humeral fractures, disagreement between a nondisplaced and a two-part fracture has far fewer treatment implications than disagreement between a nondisplaced and a four-part fracture. Weighting the kappa statistic accounts for these different levels of importance. However, any use of the weighted kappa statistic should include a clear explanation of the weighting scheme selected, because the value of kappa will vary, even for the same observations, if the weighting scheme varies.29 Thus, without specific knowledge of the weighting scheme used, it is difficult to compare the reliability results of fracture classification systems across different studies.
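The dependence on the weighting scheme is easy to demonstrate. The following Python sketch implements a weighted kappa of the form kappa_w = 1 - sum(w * observed) / sum(w * expected), using disagreement weights that are zero on the diagonal. The "linear" and "quadratic" schemes are two common choices assumed here for illustration; the text does not state which scheme Fleiss or any cited study used. With all-or-nothing weights ("unweighted"), the formula reduces to ordinary Cohen's kappa. The data are the same hypothetical Neer classifications used for the unweighted example.

```python
def weighted_kappa(rater_a, rater_b, categories, scheme="linear"):
    """Weighted kappa for two observers using ordered categories.

    kappa_w = 1 - sum(w * observed) / sum(w * expected), where w is a
    disagreement weight (0 when the observers agree) and expected
    proportions are products of the two observers' marginal frequencies.
    """
    k, n = len(categories), len(rater_a)
    if k < 2 or n == 0 or n != len(rater_b):
        raise ValueError("need >= 2 categories and equal, non-empty ratings")
    index = {category: i for i, category in enumerate(categories)}

    # Joint proportions of (observer A category, observer B category).
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1 / n
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    def weight(i, j):
        distance = abs(i - j) / (k - 1)  # 0 (same) to 1 (furthest apart)
        if scheme == "unweighted":
            return 0.0 if i == j else 1.0
        if scheme == "linear":
            return distance
        if scheme == "quadratic":
            return distance ** 2
        raise ValueError(f"unknown weighting scheme: {scheme}")

    cells = [(i, j) for i in range(k) for j in range(k)]
    numerator = sum(weight(i, j) * observed[i][j] for i, j in cells)
    denominator = sum(weight(i, j) * row[i] * col[j] for i, j in cells)
    if denominator == 0:
        raise ValueError("kappa is undefined for these ratings")
    return 1 - numerator / denominator


# Hypothetical Neer classifications (number of parts) by two observers.
observer_a = [1, 2, 2, 3, 4, 1, 2, 3, 3, 4]
observer_b = [1, 2, 3, 3, 4, 1, 2, 2, 3, 3]
for scheme in ("unweighted", "linear", "quadratic"):
    value = weighted_kappa(observer_a, observer_b, [1, 2, 3, 4], scheme)
    print(f"{scheme}: {value:.3f}")
# unweighted: 0.589
# linear: 0.722
# quadratic: 0.842
```

The observations are identical in all three lines; only the weights change. Because every disagreement here is between adjacent categories, schemes that penalize near misses lightly report higher reliability, which is why the weighting scheme must be reported.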

TABLE 2-3 Range of the Kappa Statistic

have been used to categorize kappa values; values less than 0.00

indicate poor reliability, 0.01 to 0.20 indicate slight reliability,

0.21 to 0.40 indicate fair reliability, 0.41 to 0.60 indicate moderate

reliability, 0.61 to 0.80 indicate substantial reliability, and 0.81 to

1.00 indicate nearly perfect agreement. Although these criteria have

gained widespread acceptance, the values were chosen arbitrarily and

were never intended to serve as general benchmarks. A second set of criteria, also arbitrary, has been proposed by Svanholm et al.: values less than 0.50 indicate poor reliability, 0.51 to 0.74 indicate good reliability, and greater than 0.75 indicate excellent reliability.69
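Because the two sets of criteria draw their boundaries in different places, the same kappa value can receive different labels. The small Python sketch below encodes both sets of cut-offs as quoted above. The function names are hypothetical, and the assignment of values that fall in the gaps between the published ranges (for example, exactly 0.00 or 0.50) is an assumption.

```python
def landis_koch_label(kappa):
    """Landis and Koch descriptive category, using the cut-offs quoted in the text."""
    if kappa < 0.0:
        return "poor"
    if kappa <= 0.20:  # assumption: 0.00 itself is treated as "slight"
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "nearly perfect"


def svanholm_label(kappa):
    """Svanholm et al. category; handling of the 0.50-0.51 and 0.74-0.75 gaps is assumed."""
    if kappa < 0.51:
        return "poor"
    if kappa < 0.75:
        return "good"
    return "excellent"


for k in (0.45, 0.59, 0.85):
    print(k, landis_koch_label(k), svanholm_label(k))
```

A kappa of 0.45, for example, is "moderate" under the first set of criteria but "poor" under those of Svanholm et al.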

been found to be a limitation of many fracture classification systems.

Many studies have documented only fair to poor interobserver reliability for a wide range of fracture classification systems. Systems tested have included, among others, the Neer classification system of proximal humeral fractures,7,8,39,67 the Garden classification systems of proximal femoral fractures,2,28,53,56,64 the Rüedi and Allgöwer and AO classification systems of distal tibial fractures,22,45,71 the Lauge-Hansen and Weber classifications of malleolar fractures,16,52,72,73 and the Schatzker and AO classification systems of proximal tibial fractures.12,46,75,76,77

Even the Gustilo-Anderson classification system for classifying open

fractures has been shown to have only fair interobserver reliability.10

Additionally, studies have shown observer variability in classifying

various other orthopaedic injuries, such as fractures of the acetabulum,6,57,74 the distal radius,1,3,36 the scaphoid,20 the spine,5,55 the calcaneus,35,41 and gunshot fractures of the femur.66

sources of this variability, but the root cause for the variability has

not been identified. It remains unknown whether any system for the classification of fractures can perform with excellent interobserver reliability when it is used by many observers. A methodology for validating fracture classification systems has been proposed, but it is highly detailed and extremely time-consuming, and it is unknown whether it can be applied in practice.4

the reliability of fracture classification systems should clearly state

the weighting scheme used. Methodological issues such as this were

evaluated in a systematic review of 44 published studies assessing the

reliability of fracture classification systems.4

Various methodological issues were identified, including a failure to ensure that the study sample of fracture radiographs was representative of the spectrum and frequency of injury severity seen for the particular fracture (61% of the studies), a failure to justify the size of the study group (100% of the studies), and inadequate statistical analysis of the data (61% of the studies). Although the authors of this review used very rigid and, some would argue, unfairly rigorous criteria to evaluate these studies, their conclusion that reliability studies of fracture classification cannot easily be compared with one another is valid and appropriate. A systematic methodological approach to the development and validation of new fracture classification systems is needed.

a fracture classification scheme correlates well with outcomes

following fracture care.70 In a

prospective, multicenter study, 200 patients with unilateral isolated

lower extremity fractures (acetabulum, femur, tibia, talus, or

calcaneus) underwent various functional outcome measurements at 6 and

12 months, including the Sickness Impact Profile and the AMA Impairment

rating. The AO/OTA fracture classification for each of these patients

was correlated with the functional outcome measures. While the study

indicated some significant differences in functional outcome between

type C and type B fractures, there was no significant difference

between type C and type A fractures. The authors concluded that the

AO/OTA code for fracture classification may not be a good predictor of

6- and 12-month functional performance and impairment for patients with

isolated lower extremity fractures.

some of the reasons for interobserver variation in the classification

of fractures. These studies have generally focused on a few specific

variables or tasks involved in the fracture classification process.

Some of those which have been investigated are discussed in the

following paragraphs.

clinical practice and may affect the observer’s ability to accurately

or reproducibly identify and classify the fracture. Many have attributed the observer variability seen in fracture classification systems to variations in the quality of radiographs.1,7,28,37,39,67 Studies looking specifically at this variable, however, have not demonstrated it to be a significant source of observer variability.16,22

In one such study involving classification of tibial plafond fractures

using the Rüedi and Allgöwer system, observers were asked to classify

the fractures, but also asked to make a determination of whether the

radiographs were of adequate quality to classify the fracture.22

In that study, observers agreed less on the quality of the radiographs (mean kappa 0.38 ± 0.046) than on the classification of the fractures themselves (mean kappa 0.43 ± 0.048). In addition, the extent of

interobserver agreement on the quality of the radiographs had no

correlation with the extent of agreement in classifying the fractures.

The authors concluded that, based on the results of their

investigation, it appeared that improving the quality of plain

radiographic images would be unlikely to improve the reliability of

classification of fractures of the tibial plafond.

as CT or magnetic resonance imaging (MRI) scanning, in which high-quality images should always be obtained, have generally not demonstrated improved interobserver reliability over studies that have used plain radiographs alone. Bernstein et al. found that CT scans did

not improve interobserver agreement for the Neer classification of

proximal humerus fractures.7 Chan et

al., in a study of the impact of a CT scan on determining treatment

plan and fracture classification for tibial plateau fractures, found

that viewing the CT scans did not improve interobserver agreement on

classification, but did increase agreement regarding treatment plan.12

Two studies investigating the effect of adding CT information to plain

radiographs on the interobserver agreement in classifying fractures of

the tibial plateau and tibial plafond failed to show a significant

improvement in agreement after the addition of CT scan information.12,46,47 Katz et al.,35

studying fractures of the distal radius, found the addition of a CT

scan occasionally resulted in changes in treatment plans and also

increased agreement among observers on the surgical plan in treating

these injuries. A study investigating the use of three-dimensional CT

scanning in distal humeral fractures concluded that three-dimensional

CT did not improve interobserver reliability over plain radiographs or

two-dimensional CT scans, but that it did improve intraobserver reproducibility.25 These authors and

others have concluded that CT scan information may be a useful adjunct

in surgical planning for a severe articular fracture, but is probably

not required for the purpose of fracture classification.

Thirty-five distal radius fractures that had been classified as AO/OTA

A2 and A3 (extra-articular types) after radiographic review underwent

CT scanning. The scans revealed that 57% of the fractures had an

intra-articular component and had been inappropriately classified as AO/OTA type A fractures. The reader should note that this study did not attempt to determine the interobserver reliability of the classification; it showed only that a single observer reviewing the CT scans disagreed with the original fracture classification in 57% of cases. It remains

unproven whether CT scanning is a useful adjunct to improve

interobserver agreement in the classification of fractures.

interobserver reliability of classification of tibial plateau fractures

according to the Schatzker classification system.77

Three orthopaedic trauma surgeons classified tibial plateau fractures first with plain radiographs and then with the addition of CT and MRI scans. Kappa values averaged 0.68 with plain radiographs

alone, 0.73 with the addition of a CT scan, and 0.85 with addition of

an MRI scan. No statistical analysis was reported to indicate whether

the addition of CT and MRI information resulted in a statistically

significant improvement in reliability.

diagnostic image, usually a radiograph, on which the observer must make

observations, measurements, or both. Even with high-quality

radiographs, however, overlapping osseous fragments or densities can

make the accurate identification of each fracture fragment difficult.

Osteopenia can also increase the difficulty in accurate classification

of fractures. Osteopenic bone casts a much fainter “shadow” on radiographic films, making the delineation of fine

trabecular or articular details a much more difficult task for the

observer. Osteopenia represents a physiologic parameter that may affect

treatment plans and outcomes, but is not mentioned in any

classification system.

accurately classify with plain radiographs. Articular fractures tend to occur in areas of the skeleton with complex three-dimensional osteology and may be highly comminuted, and the classification systems used for these fractures are predicated on the accurate identification of each fracture fragment and the determination of its relationship to the other fragments and/or its position in the intact bone.

Observer variability in the identification of these small fracture

fragments in complex fractures would be expected to lead to poorer

interobserver reliability of the fracture classification system.

Dirschl et al. investigated the observers’ ability to identify small

articular fragments in classifying tibial plafond fractures according

to the Rüedi and Allgöwer classification.22

Observers classified 25 tibial plafond fractures on radiographs and

then on line drawings that had been made from those radiographs by the

senior author; interobserver reliability was no different in the two

situations. At a second classification session, observers were asked to

first mark, on the fracture radiographs, the articular fragments and

then to classify the fractures; in a final session, the observers

classified the radiographs after the fracture fragments had been

premarked by the senior author. Having observers mark the fracture

fragments resulted in no improvement in interobserver reliability of

the fracture classification system. When identification of the

articular fragments was removed from the fracture classification

process, however, by having the fragments premarked by the senior

author, the interobserver reliability was significantly improved (mean

kappa value increased from 0.43 to 0.54, P<0.025).

The authors believed the results of this study indicated that observers

classifying fractures of the tibial plafond have great difficulty

identifying the fragments of the tibial articular surface on

radiographs. They went on to postulate that fracture classification systems predicated on the identification of the number and displacement of small articular fragments may inherently perform poorly on reliability analyses, because observers have difficulty reliably identifying the fracture fragments.

particularly articular fragments, has long been felt to be important in

characterizing fractures and has been used by many to make decisions

regarding treatment. Additionally, some classification systems for

fractures are predicated on the observer accurately identifying the

amount of displacement and/or angulation of fracture fragments; the

Neer classification system for proximal humeral fractures is an

example. Finally, the quality of fracture care has frequently been

judged by measuring the amount of displacement of articular fracture

fragments on posttreatment radiographs.

variability among observers in making measurements on radiographs and

that this may be a source for variability in fracture classification.

One such study assessed the error of measurement of articular

incongruity of tibial plateau fractures.47

In this study, five orthopaedic traumatologists measured the maximum

articular depression and the maximum condylar widening on 56 sets of

tibial plateau fracture radiographs. For 38 of the cases, the observers

also had a computed tomography scan of the knee to assist in making

measurements. The results of the study indicated that the 95% tolerance

limits for measuring maximum articular depression were ± 12

millimeters, and for measuring maximum condylar widening were ± 9

millimeters. This result indicates that there is substantial

variability in making these seemingly simple measurements.
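The chapter does not state how the 95% tolerance limits in that study were computed. As a simpler, related illustration (a swapped-in technique, not necessarily the method the authors used), the Python sketch below computes Bland-Altman 95% limits of agreement: the mean difference between two observers' paired measurements, plus or minus 1.96 standard deviations of those differences. The function name and the measurements are hypothetical.

```python
import statistics


def limits_of_agreement(measurements_a, measurements_b, z=1.96):
    """Bland-Altman 95% limits of agreement for paired measurements (same units)."""
    if len(measurements_a) != len(measurements_b) or len(measurements_a) < 2:
        raise ValueError("need at least two paired measurements")
    differences = [a - b for a, b in zip(measurements_a, measurements_b)]
    mean_difference = statistics.mean(differences)
    sd_difference = statistics.stdev(differences)  # sample standard deviation
    return mean_difference - z * sd_difference, mean_difference + z * sd_difference


# Hypothetical maximum articular depression (mm) measured by two observers on five films.
observer_a_mm = [5, 8, 12, 3, 10]
observer_b_mm = [7, 6, 15, 3, 6]

lower, upper = limits_of_agreement(observer_a_mm, observer_b_mm)
print(f"{lower:.1f} mm to {upper:.1f} mm")  # -5.4 mm to 5.8 mm
```

Limits that span several millimeters mean that two observers reading the same film could reasonably report quite different values.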

of measurements decreases (the range of articular depression in the study above was 35 millimeters). Thus, one would expect narrower tolerance limits when measuring reduced tibial plateau fractures than those observed in the reported study, which measured injury films. However, a study of the tolerance limits for measuring articular congruity in healed distal radial fractures identified tolerance limits of ± 3 millimeters, even though the range of articular congruity measurements was only 4 millimeters.36

reliability of measurement of articular fracture displacements. In one

study of intra-articular fractures of the distal radius, there was poor

correlation between measurement of gap widths or step deformities on

plain radiographs as compared to CT scans.14

Nearly one third of measurements made from plain radiographs were significantly different from those made from CT scans. Another study

extended these findings by examining known intra-articular

displacements made in the hip joints of cadaveric specimens.8

The authors observed that CT-generated data were far more accurate and

reproducible than were data obtained from plain films. Moed et al.

reported on a series of posterior wall acetabular fractures treated

with open reduction and internal fixation in which reduction was

assessed on both plain radiographs and on CT scans.48

Of 59 patients who were graded as having an anatomic reduction based on

plain radiographs and for whom postoperative CT scans were obtained, 46

had a gap or step-off greater than 2 millimeters. These results may not

be characteristic of all fractures, since the posterior wall of the

acetabulum may be more difficult to profile using plain radiographs

than most areas of other joints.

observer variability in the routine measurement of articular

incongruity on radiographs. It also seems highly unlikely that

observers using plain radiographs can reliably measure small amounts of

incongruity. This suggests that improvements in our ability to reliably

assess the displacement of fracture fragments are necessary to reduce

variability in articular fracture assessment.

requiring the observer to choose between many possible categories in

characterizing a fracture. The AO/OTA system, for example, has up to 27

possible classifications for a fracture of a single bone segment (there

are three choices each for fracture type, group, and subgroup). It

seems reasonable that observers would find it easier to classify a

fracture if there were fewer choices to be made, and studies of the

AO/OTA fracture classification system have confirmed this. In nearly

all cases, for various fractures, classification of type can be

performed much more reliably than classification into groups or

subgroups.16,36,43,45,56,71,75 These studies concluded that, for optimal reliability, this classification should not be used beyond characterization of fracture type.
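The arithmetic behind the 27 possible classifications is simply 3 × 3 × 3. The short Python sketch below enumerates the categories available at each level. The code strings follow the familiar letter, number, decimal pattern (for example, A1.1), but they are a simplified illustration that omits the bone and segment prefix and should not be read as the official coding specification.

```python
from itertools import product

# Simplified, hypothetical AO/OTA-style codes for one bone segment:
# type (A, B, C), group (1-3), and subgroup (1-3).
TYPES = ("A", "B", "C")
GROUPS = ("1", "2", "3")
SUBGROUPS = ("1", "2", "3")

type_level = list(TYPES)
group_level = [t + g for t, g in product(TYPES, GROUPS)]
subgroup_level = [f"{t}{g}.{s}" for t, g, s in product(TYPES, GROUPS, SUBGROUPS)]

for name, codes in (("type", type_level), ("group", group_level), ("subgroup", subgroup_level)):
    print(f"{name}: {len(codes)} possible categories, e.g. {codes[:3]}")
```

An observer working at the type level chooses among 3 categories, whereas one working to the subgroup level chooses among 27. This is consistent with the finding that reliability falls as classification proceeds beyond type.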

choices to no more than two for any step in the classification of

fractures would improve the ability of the observer to classify the

fracture and would improve interobserver reliability. In 1996, the developers of the AO/ASIF comprehensive classification of fractures (CCF) modified it to incorporate binary decision-making.50

The reasoning was that, if observers could answer a series of “yes or

no” questions about the fracture, they could more precisely and

reliably classify the fracture. The modification was planned,

announced, and implemented without any sort of validation that the

modification would achieve the desired outcomes or that binary decision

making would improve reliability in fracture classification.
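To illustrate what binary decision-making means in practice, the Python sketch below reaches the AO/OTA fracture type for an end segment of a long bone through two yes-or-no questions instead of a single three-way choice. The question wording and the function name are hypothetical simplifications based on the usual definitions of the types (A extra-articular, B partial articular, C complete articular). They are not the published binary questions of the CCF.

```python
def ao_type_by_binary_questions(involves_joint_surface: bool,
                                joint_surface_partly_attached_to_shaft: bool) -> str:
    """Reach an AO/OTA-style type (A, B, or C) through two yes/no questions.

    Hypothetical simplification for an end segment of a long bone.
    """
    # Question 1: does the fracture line enter the articular surface?
    if not involves_joint_surface:
        return "A"  # extra-articular; question 2 is never asked
    # Question 2: does part of the articular surface remain in continuity with the shaft?
    if joint_surface_partly_attached_to_shaft:
        return "B"  # partial articular
    return "C"  # complete articular


print(ao_type_by_binary_questions(False, False))  # A
print(ao_type_by_binary_questions(True, True))    # B
print(ao_type_by_binary_questions(True, False))   # C
```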

fracture types have evaluated whether binary decision making improves

reliability in the classification of fractures. The first of these

studies developed a binary modification of the Rüedi and Allgöwer

classification of tibial plafond fractures and had observers classify

25 fractures according to the original classification system and the

binary modification.22 The binary

modification was applied rigidly in fracture classification sessions

that were proctored by the author; observers were forced to make binary

decisions about the fracture radiographs, and not permitted to jump to

the final fracture classification. The results of this study indicated

that the binary modification of this classification system did not

perform with greater reliability than the standard classification

system (mean kappa 0.43 ± 0.048 standard and 0.35 ± 0.038 binary).

Another investigation compared the interobserver reliability of

classification of malleolar fractures of the tibia (segment 44)

according to the classic and binary modification of the AO/ASIF CCF.16

Six observers classified 50 malleolar fractures according to both the

standard and binary systems, and no difference in interobserver

reliability could be demonstrated between the two systems (mean kappa

0.61 standard and 0.62 binary). The authors concluded that strictly

enforced binary decision-making did not improve reliability in the

classification of malleolar fractures according to the AO/ASIF CCF. The

results of these two studies cast doubt on the effectiveness of binary

decision making in improving interobserver reliability in the

classification of fractures.

amount of information provided to an observer could be overwhelming and limit the reliability of fracture classification.35

This group tested the Sanders classification of calcaneal fractures

and, rather than providing observers with the full CT scan data for

each of the 30 cases, they provided each observer with only one

carefully selected CT image from which to make a classification

decision. The results indicated that the overall interobserver

reliability was no better with only one CT cut than with the full

series of CT cuts. The results clearly showed, however, that

interobserver agreement was much better for the most and least severe

fractures in the series and poorest for fractures in the midrange of

severity. This finding is probably applicable to all classification

schemes, in which observers are much better at differentiating the best

from the worst than they are at cases in the middle of the spectrum of

injury severity.

are categorical; regardless of the nature or complexity of the classification system, fractures are sorted into discrete categories. Injuries to individual patients, however, occur on a continuum of energy and severity, and fractures follow this same pattern, occurring on a spectrum of injury severity. The process of fracture classification can therefore be described as converting a continuous variable, fracture severity, into a categorical one. This categorization of a continuous variable may be a source of observer variability in fracture classification systems.29,45

One recent study concluded that “it has become clear that these

deficiencies are related to the fact that the infinite variation of

injury is a continuous variable and to force this continuous variable

into a classification scheme, a dichotomous variable, will result in

the discrepancies that have been documented.”29

The authors further suggested that “multiple classifiers, blinded to

the treatment selected and clinical outcomes, and consensus methodology

should be used to optimize the utility of injury classification schemes

for research and publication purposes.”

proposed that, instead of classifying fractures, perhaps fractures

should merely be rank ordered from the least severe to the most severe.

This would serve as a means to preserve the continuum of fracture

severity and has been proposed as a means of potentially improving

interobserver reliability. An initial study using this methodology in

tibial plafond fracture showed promise.19

Twenty-five tibial plafond fractures were ranked by three orthopaedic

traumatologists from the least severe to the most severe, and the group

demonstrated outstanding interobserver reliability, with a Cronbach

alpha statistic17 of 0.94 (nearly

perfect agreement). In a subsequent study, the rank order concept was

expanded and a series of 10 tibial plafond fractures were ranked by 69

observers.21 The intraclass

correlation coefficient was 0.62, representing substantial agreement,

but also represented some deterioration from the results with only

three observers. Based on these results, which are superior to those of

most categorical fracture classification systems that have been

evaluated, further study of this sort of classification system appears

to be warranted.
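Applied to rank orders, Cronbach's alpha treats each observer's ranking as one "item" and measures how consistently the observers order the same fractures. For k observers, alpha = k/(k - 1) × (1 - (sum of the variances of each observer's ranks) / (variance of the summed ranks)). The Python sketch below implements that formula. The rankings and the function name are hypothetical, and it is an assumption that the cited study arranged its data in exactly this way.

```python
import statistics


def cronbach_alpha(rankings_by_observer):
    """Cronbach's alpha with observers as items and fractures as subjects.

    rankings_by_observer[o][f] is the rank observer o gave fracture f.
    """
    k = len(rankings_by_observer)
    if k < 2:
        raise ValueError("need at least two observers")
    n_fractures = len(rankings_by_observer[0])
    if n_fractures < 2 or any(len(r) != n_fractures for r in rankings_by_observer):
        raise ValueError("every observer must rank the same fractures (at least two)")
    # Sum of each observer's rank variance (sample variance, n - 1 denominator).
    item_variance_sum = sum(statistics.variance(r) for r in rankings_by_observer)
    # Variance of each fracture's ranks summed across observers.
    totals = [sum(ranks) for ranks in zip(*rankings_by_observer)]
    total_variance = statistics.variance(totals)
    if total_variance == 0:
        raise ValueError("alpha is undefined when the summed ranks do not vary")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical ranks (1 = least severe) given by three observers to five fractures.
rankings = [
    [1, 2, 3, 4, 5],
    [1, 3, 2, 4, 5],
    [2, 1, 3, 5, 4],
]
print(round(cronbach_alpha(rankings), 2))  # 0.91
```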

fracture classification system that ranks cases on a continuum of

injury severity would be to approach the matter much in the same way as

clinicians determine bone age in children.21

A series of radiographs would be published that represent the spectrum

of fracture severity, from the least severe to the most severe, and

then an observer would simply review these examples and determine where

the fracture under review lay on this spectrum of severity. This

concept is markedly different from any scheme used to date to classify

fractures, would be unlikely to completely replace other systems of

fracture classification, and may have weaknesses that have not yet been

determined. Such a system will require extensive testing and validation

before it could be widely used.

Recently, many have come to question whether any system for fracture

classification that relies solely on radiographic data will be highly

reliable or highly predictive of the outcome of severe fractures. There

is strong evidence that the extent of injury to the soft tissues (cartilage, muscle, tendon, skin, etc.), the magnitude and duration of the patient’s physiologic response to injury, the presence of comorbid conditions, and the patient’s socioeconomic background and lifestyle may all play critical roles in influencing outcomes following severe fractures.

articular cartilage is a critical and significant contributor to the

overall severity of an articular fracture, as evidenced by studies

documenting poor outcomes after osteochondritis dissecans and other

chondral injuries. The information present in the orthopaedic clinical

literature indicates that the severity of injury to the articular

surface during fracture has an important bearing on outcome and the

eventual development of posttraumatic osteoarthrosis. A better

understanding of the impaction injury to the articular cartilage and

the prognosis of such injury will be critical to improving our

assessment and understanding of severe intra-articular fractures.

Unfortunately, there currently are no imaging modalities that have been

validated to indicate to the clinician the extent of injury to the

cartilage of the articular surface and/or the potential for repair or

the risk of posttraumatic degeneration of the articular cartilage.

Plain radiographs and CT scans provide very little information about

the current and future health of the articular cartilage in a joint

with a fracture.

well-trained, will have some level of variability in applying any

tool—no matter how reliable—in classifying fractures. The magnitude of

the “baseline” level of inherent human variability in fracture

classification is entirely unknown. As such, it is extremely difficult

for investigators to know with precision what represents excellent

interobserver reliability in fracture classification. There is

disagreement over the best statistical analysis to use in assessing

reliability or what level of agreement is acceptable in studies on

fracture classification. Statistics such as the intraclass correlation

coefficient are very good as indicators of when a laboratory test, such

as the hematocrit or serum calcium level, has acceptable reliability

and reproducibility. Whether the same threshold level of reliability

should be applied to a process such as fracture classification is

unknown. Similarly, the weighted kappa statistic is somewhat difficult to interpret for fracture classification, since there are few guidelines to aid in interpreting its results. Landis and Koch acknowledged that their widely accepted reference intervals for the kappa statistic were chosen arbitrarily. Additionally, a recent investigation seemed to

indicate that using the kappa statistic with a small number of

observers introduces the possibility of “sampling error” causing an

increased variance in the kappa statistic itself.4,57

Having many different observers causes stabilization of the kappa value

around a “mean value” for the agreement among the population of

observers. Invariably, however, using more observers results in a lower mean kappa value, indicating poorer interobserver reliability of the classification system being tested. Therefore, studies with a small number of observers that reported excellent reliability in fracture classification systems may be reporting spuriously high results for the

kappa statistic—results that would be much lower if more observers were

used. Unfortunately, there are currently no better or more reliable

methods for reporting and interpreting interobserver reliability than

the use of the ICC or the kappa statistic.
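One common way to summarize agreement among several observers is to compute Cohen's kappa for every pair of observers and average the results. The Python sketch below does this. The function names and ratings are hypothetical, and it is an assumption that the studies cited above summarized their data in this way. With only three observers, the mean rests on just three pairwise values, which gives an intuitive sense of why estimates from small panels can be unstable.

```python
from collections import Counter
from itertools import combinations
import statistics


def cohen_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two observers rating the same cases."""
    n = len(ratings_a)
    p_observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_chance) / (1.0 - p_chance)


def mean_pairwise_kappa(ratings_by_observer):
    """Average Cohen's kappa over every pair of observers; also return the pairwise values."""
    if len(ratings_by_observer) < 2:
        raise ValueError("need at least two observers")
    pairwise = [cohen_kappa(a, b) for a, b in combinations(ratings_by_observer, 2)]
    return statistics.mean(pairwise), pairwise


# Hypothetical classifications (types A, B, C) of six fractures by three observers.
panel = [
    ["A", "A", "B", "B", "C", "C"],
    ["A", "A", "B", "C", "C", "C"],
    ["A", "B", "B", "B", "C", "A"],
]
mean_kappa, pairwise = mean_pairwise_kappa(panel)
print([round(k, 2) for k in pairwise], round(mean_kappa, 2))  # [0.75, 0.5, 0.31] 0.52
```

Here the pairwise values range from about 0.31 to 0.75, so the summary figure depends heavily on which observers happen to be included.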

describing fractures; this has been one of the best uses for fracture

classification systems. Using a well-known fracture classification to

describe a fracture to an orthopaedist or colleague who cannot view the fracture radiographs immediately evokes in that orthopaedist a visual image of the fracture. This visual image, even if the classification is not highly reliable on statistical testing, enhances communication between orthopaedic physicians.

educational tools. Educating orthopaedic trainees in systems of

fracture classification is highly valuable, for many systems are

devised from the mechanism of injury or from the anatomical alignment

of the fracture fragments. These are important educational tools to

assist orthopaedic trainees in better understanding the osteology of

different parts of the skeleton and the various mechanisms of injury

that can result in fractures. Educational systems using fracture

classification methodologies can assist orthopaedic trainees in

formulating a context in which to make treatment decisions, and can

also provide an important historical context of fracture care and

fracture classification in orthopaedics.

treatment, and it is clearly the intent of many fracture classification

systems to do so. It is unclear, however, from much of the literature

that has been published, whether fracture classification systems are

valid tools to guide treatment. The fact that there is so much observer

variability in fracture classification adds an element of doubt to

comparative clinical studies that have used fracture classification as

a guide to treatment.

be useful in predicting outcomes following fracture care. The

orthopaedic literature to date, however, does not seem to clearly

indicate that fracture classification systems can be used to predict

patient outcomes in any sort of valid or reproducible way. The

interobserver variability of many fracture classification systems is

one of the key reasons that the literature cannot clearly show this

correlation. One exception to this, however, is that most fracture classification systems have good reliability in characterizing the most

severe and least severe injuries—those that correlate with the best and

worst outcomes. It is in the midrange of injury severity that

classification systems demonstrate the poorest reliability and the

poorest ability to predict outcomes.

determination of injury severity than merely classifying a fracture

according to plain radiographs. It has become clear in recent years that variables other than the radiographic appearance of the fracture play a huge role in determining patient outcome, and these variables will be used in new systems for determining injury severity in patients with fractures. Such variables include objective measures of the energy of injury (for example, from CT scans, finite element models, or volumetric measures), measures of the extent of injury to the soft tissues, and objective measures of the patient’s physiologic reserve and response to injury, such as serum lactate levels. An assessment of overall health status and the existence of

comorbid conditions are other ways that may be used to make more

comprehensive the determination of fracture severity. These factors

will likely be combined with the radiographic appearance of the

fracture to better guide treatment and to better predict outcomes of

fracture care.

and more reliably determining and characterizing the injury severity in

patients with fractures. Newer uses of CT, MRI, and ultrasound will be instrumental in giving the treating surgeon more information about the extent of soft tissue injury, the health of the bone and cartilage, and the biology at the fracture site. We may also gain information about the patient’s ability to heal. All of these will advance the orthopaedist’s ability to determine injury severity. For example, it is possible with high-field-strength MRI to assess the proteoglycan content of articular cartilage.

Since articular cartilage is not imaged on CT or plain radiographic

imaging, its health has been generally excluded from the classification

of fractures. However, the long-term health of the articular cartilage

is crucial to the patient’s outcome following a severe articular

injury. In the future, the ability to use advanced imaging modalities

to better characterize the current health and predict the future health

of the articular cartilage will be a great advancement in our ability

to accurately classify fractures and to use fracture classification as

a predictive measure.

that will better assure that fractures can be measured and

characterized on a continuum, which is how they occur. These new classification systems will better represent the continuous nature of injury severity than do systems in use today, many of which were based simply on anatomical considerations rather than on injury severity.

Ideas such as rank-ordering fractures, putting fractures on a

continuum, sending fractures to a fracture classification clearing

house (for classification by one or just a few observers) are but a few

possible future approaches to advancing and making more reproducible

the classification of fractures.

process of validation a fracture classification system should undergo before becoming available for general use. Most classification systems in general use have undergone no formal validation. Most came into general use because of the reputation or influence of the person or group that devised them, or because the system has been in use so long that it has simply become part of the vernacular of fracture classification and fracture care. One study has proposed a formal, detailed, and very time-consuming methodology for the validation of all fracture classification systems. This methodology closely resembles the one used to validate patient-based outcome measures such as the Short Form-36 and the Musculoskeletal Function Assessment.4 It is not yet clear whether such validation methods would improve the interobserver reliability of fracture classification systems. It is clear, however, that such methods would be exhaustive and very time-consuming. Many orthopaedic surgeons also do not believe that such detailed validation is necessary for fracture classification systems.
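Interobserver reliability of a classification system is commonly summarized with the kappa statistic. Kappa corrects the raw proportion of agreement for the agreement expected by chance alone. The minimal Python sketch below computes unweighted Cohen's kappa for two observers. The function name, the Gustilo-style type labels, and the ten-case data set are hypothetical. Studies with many observers or ordered categories often use other variants, such as weighted or multi-rater kappa.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two observers classifying the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("observers must classify the same, non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: proportion of cases placed in the same category.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each category, the product of the two observers'
    # marginal proportions, summed over all categories either observer used.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if p_chance == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical data: two observers assigning open-fracture types to ten cases.
observer_1 = ["I", "I", "II", "II", "III", "III", "I", "II", "III", "II"]
observer_2 = ["I", "II", "II", "II", "III", "II", "I", "II", "III", "III"]
# kappa = (0.70 - 0.35) / (1 - 0.35), about 0.54
print(round(cohens_kappa(observer_1, observer_2), 2))  # 0.54
```

In this example the observers agree on 7 of 10 cases (70%). Their category frequencies alone would produce 35% agreement by chance, so kappa is only about 0.54.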

will advance our understanding of fractures and our ability to classify them. Advances in imaging and image analysis, perhaps coupled with neural networks and other machine-learning technologies, may make it possible to teach computers to classify fractures with a high degree of reliability and reproducibility. One could envision a system in which digital images of a fracture are classified automatically, according to any of several classification systems, by a computer at the time the radiographs are obtained. This would resemble the way electrocardiogram (EKG) interpretations are currently generated by a computer at the time the patient's cardiac tracing is obtained.

fracture classification systems. Rigorous statistical methods, or at least consensus statistical methodologies, will be developed and implemented. Although these methods will be detailed, time-consuming, and involved, they will result in greatly improved validation of many fracture classification systems.

DJ, Blair WF, Steyers CM, et al. Classification of distal radius fractures: an analysis of interobserver reliability and intraobserver reproducibility. J Hand Surg 1996;21A:574-582.

E, Jorgensen LG, Hededam LT. Evans classification of trochanteric fractures: an assessment for the interobserver reliability and intraobserver reproducibility. Injury 1990;21:377-378.

GR, Rasmussen JB, Dahl B, et al. Older's classification of Colles' fractures: good intraobserver and interobserver reproducibility in 185 cases. Acta Orthop Scand 1991;62:463-464.

L, Bhandari M, Kellam J. How reliable are reliability studies of fracture classifications? A systematic review of their methodologies. Acta Orthop Scand 2004;75:184-194.

L, Anderson J, Chesnut R, et al. Reliability and reproducibility of dens fracture classification with use of plain radiography and reformatted computer-aided tomography. J Bone Joint Surg Am 2006;88:106-112.

PE, Dorey FJ, Matta JM. Letournel classification for acetabular fractures: assessment of interobserver and intraobserver reliability. J Bone Joint Surg Am 2003;85:1704-1709.

J, Adler LM, Blank JE, et al. Evaluation of the Neer system of classification of proximal humeral fractures with computerized tomographic scans and plain radiographs. J Bone Joint Surg Am 1996;78:1371-1375.

J Jr, Goldfarb C, Catalano L, et al. Assessment of articular fragment displacement in acetabular fractures: a comparison of computed tomography and plain radiographs. J Orthop Trauma 2002;16:449-456.

S, Bagger J, Sylvest A, et al. Low agreement among 24 doctors using the Neer classification; only moderate agreement on displacement, even between specialists. Int Orthop 2002;26:271-273.

RJ, Jones AL. Interobserver agreement in the classification of open fractures of the tibia. J Bone Joint Surg Am 1994;76:1162-1166.

PSH, Klimkiewicz JJ, Luchette WT, et al. Impact of CT scan on treatment plan and fracture classification of tibial plateau fractures. J Orthop Trauma 1997;11:484-489.

RJ, Bindra RR, Evanoff BA, et al. Radiographic evaluation of osseous displacement following intra-articular fracture of the distal radius: reliability of plain radiographs versus computed tomography. J Hand Surg Am 1997;22:792-800.

III, WL, Dirschl DR. An assessment of the effectiveness of binary decision-making in improving the reliability of the AO/ASIF classification of fractures of the ankle. J Orthop Trauma 1998;12:280-284.

HC, Franck WM, Sabauri G, et al. Incorrect classification of extra-articular distal radius fractures by conventional x-rays: comparison between biplanar radiologic diagnostics and CT assessment of fracture morphology. Unfallchirurg 2004;107(6):491-498.

TA, Willis MC, Marsh JL, et al. Rank order analysis of tibial plafond fracture: does injury or reduction predict outcome? Foot Ankle Int 1999;20:44-49.

VV, Davis TRC, Barton NJ. The prognostic value and reproducibility of the radiological features of the fractured scaphoid. J Hand Surg Br 1999;5:586-590.

DR, Ferry ST. Reliability of classification of fractures of the tibial plafond according to a rank order method. J Trauma 2006;61:1463-1466.

DR, Adams GL. A critical assessment of methods to improve reliability in the classification of fractures, using fractures of the tibial plafond as a model. J Orthop Trauma 1997;11:471-476.

DR, Marsh JL, Buckwalter J, et al. The clinical and basic science of articular fractures. Accepted by J Am Acad Orthop Surg, September 2002, 30 pages.

J, Lindenhovius A, Kloen P, et al. Two- and three-dimensional computed tomography for the classification and management of distal humeral fractures. J Bone Joint Surg Am 2006;88:1795-1801.

PA, Andersen E, Madsen F, et al. Garden classification of femoral neck fractures: an assessment of interobserver variation. J Bone Joint Surg Br 1988;70:588-590.

RB, Anderson JT. Prediction of infection in the treatment of 1025 open fractures in long bones. J Bone Joint Surg 1976;58A:453-458.

RB, Mendoza RM, Williams DN. Problems in the management of type III (severe) open fractures: a new classification of type III open fractures. J Trauma 1984;24(8):742-746.

RB, Merkow RL, Templeman D. Current concepts review: the management of open fractures. J Bone Joint Surg Am 1990;72:299-303.

CA, Dirschl DR, Ellis TJ. Interobserver reliability of a CT-based fracture classification system. J Orthop Trauma 2005;19:616-622.

MA, Beredjiklian PK, Bozentka DJ, et al. Computed tomography scanning of intra-articular distal radius fractures: does it influence treatment? J Hand Surg Am 2001;26(3):415-421.

HJ, Hanel DP, McKee M, et al. Consistency of AO fracture classification for the distal radius. J Bone Joint Surg Br 1996;78:726-731.

B, Andersen ULS, Olsen CA, et al. The Neer classification of fractures of the proximal humerus: an assessment of interobserver variation. Skeletal Radiol 1988;17:420-422.

AJ, Inda DJ, Bott AM, et al. Interobserver and intraobserver reliability of two classification systems for intra-articular calcaneal fractures. Foot Ankle Int 2006;27:251-255.

JL, Buckwalter J, Gelberman RC, et al. Does an anatomic reduction really change the result in the management of articular fractures? J Bone Joint Surg 2002;84-A:1259-1271.

JS, Marsh JL, Bonar SK, et al. Assessment of the AO/ASIF fracture classification for the distal tibia. J Orthop Trauma 1997;11:477-483.

J, Marsh JL, Nepola JV, et al. Radiographic fracture assessments: which ones can we reliably make? J Orthop Trauma 2000;14(6):379-385.

RB, Carr SEW, Watson JT. Open reduction and internal fixation of posterior wall fractures of the acetabulum. Clin Orthop Relat Res 2000;377:57-67.

ME. The comprehensive classification of fractures of long bone. In: Müller ME, Allgöwer M, Schneider R, et al, eds. Manual of Internal Fixation: Techniques Recommended by the AO-ASIF Group. 3rd ed. Heidelberg: Springer-Verlag, 1991.

JO, Dons-Jensen H, Sorensen HT. Lauge-Hansen classification of malleolar fractures: an assessment of the reproducibility of 118 cases. Acta Orthop Scand 1990;61:385-387.

DA, Jackson KR, Davies MR, et al. The impact of the Garden classification on proposed operative treatment. Clin Orthop Relat Res 2003;409:232-240.

HJ, Tscherne H. Pathophysiology and classification of soft tissue injuries associated with fractures. In: Tscherne H, ed. Fracture With Soft Tissue Injuries. New York: Springer-Verlag, 1984:1-9.

FC, Ramos LMP, Simmermacher RKJ, et al. Classification of thoracic and lumbar spine fractures: problems of reproducibility. Eur Spine J 2002;11:235-245.

H, Parker MJ, Pryor GA, et al. Classification of trochanteric fracture of the proximal femur: a study of the reliability of current systems. Injury 2002;33:713-715.

BA, Bhandari M, Orr RD, et al. Improving reliability in the classification of fractures of the acetabulum. Arch Orthop Trauma Surg 2003;123:228-233.

S, Madsen PV, Bennicke K. Observer variation in the Lauge-Hansen classification of ankle fractures: precision improved by instruction. Acta Orthop Scand 1993;64:693-694.

RD, Schork MA. Statistics with Applications to the Biological and Health Sciences. Englewood Cliffs, NJ: Prentice-Hall, 1970.

J. Fractures of the tibial plateau. In: Schatzker J, Tile M, eds. The Rationale of Operative Fracture Care. Berlin: Springer-Verlag, 1988:279-295.

IB, Steyerberg EW, Castelein RM, et al. Reliability of the AO/ASIF classification for peritrochanteric femoral fractures. Acta Orthop Scand 2001;72:36-41.

LE, Zalavras CG, Jaki K, et al. Gunshot femoral shaft fractures: is the current classification system reliable? Clin Orthop Relat Res 2003;408:101-109.

JL, Zuckerman JD, Lyon T, et al. The Neer classification system for proximal humeral fractures: an assessment of interobserver reliability and intraobserver reproducibility. J Bone Joint Surg Am 1993;75:1745-1750.

KA, Gerber C. The reproducibility of classification of fractures of the proximal end of the humerus. J Bone Joint Surg Am 1993;75:1751-1755.

H, Starklint H, Gundersen HJ, et al. Reproducibility of histomorphologic diagnoses with special reference to the kappa statistic. APMIS 1989;97:689-698.

JF, Agel J, McAndrew MP, et al. Outcome validation of the AO/OTA fracture classification system. J Orthop Trauma 2000;14:534-541.

JF, Sands AK, Agel J, et al. Interobserver variation in the AO/OTA